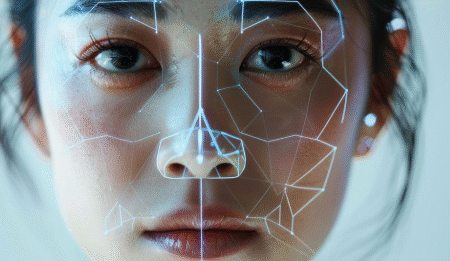

In a world where reality and illusion go hand in hand, deepfakes do not merely deceive the eye; they inflict pain. They bruise reputations, shatter trust, destabilize institutions, and undermine the very concept of truth. The machinery behind deepfakes may be breathtaking, but its effects are often devastating. The victims are not only presidents and pop stars but also your neighbor, your classmate, your parents, your kids.

When Scams Wear a Familiar Face

Picture getting a video call from the CEO of your company: urgent, calm, and reassuringly familiar. He asks you to wire money immediately for an acquisition. It sounds like him. The demeanor is the same. You comply, only to learn that you were talking to a fabrication. This isn’t science fiction; it has already happened. In one instance, a multinational company wired more than $20 million to scammers who used a deepfake replica of a senior executive to stage the theft. The most chilling aspect? It was virtually impossible to tell the real from the fake until it was much too late.

This is not limited to the boardroom. Scammers today clone celebrity voices to create fake endorsements for questionable products. These are slick, convincing, and hard to refute, particularly for elderly or vulnerable people who are less familiar with AI deception. The consequence? Shattered confidence, stolen funds, and rising fear.

Politics Under Siege

Now picture election week in a fragile democracy. Out of nowhere, a clip surfaces of the front-runner promising communal violence or admitting to vote rigging. It goes viral on WhatsApp channels and TikTok. Even if it is soon debunked, doubt has been sown. Trust fractures. Votes change. A democracy shakes.

We have already glimpsed shadows of this scenario. In Ukraine, a deepfake of President Zelenskyy telling soldiers to surrender briefly shook national morale before it was exposed as a lie.
In the UK and US, deepfake audio recordings have impersonated political figures making racist or inflammatory comments. The potential for disruption is immense. When democracy becomes a stage for illusions, whom is the public supposed to trust?

Personal Dignity Exploited

The most stomach-churning effect of deepfakes is probably the invasion of personal privacy, particularly against women and teenagers. AI software is being used to “nudify” individuals, producing realistic non-consensual pornography. These aren’t mere pictures. They are weapons of humiliation, blackmail, and psychological warfare.

In Australia, a popular sports presenter was appalled to discover that her face had been superimposed on explicit photos being shared online. Students in India and South Korea have been harassed and humiliated by classmates using deepfake nudes. The victims suffer in silence: most are too afraid to come forward, unsure whether the law can help them, and traumatized by the betrayal of their trust.

No one is exempt. Your daughter’s school picture. A friend’s selfie on social media. A coworker’s vacation video. In mere moments, these can be manipulated into something ghastly. The damage that remains is not digital; it is psychological, social, and deeply human.

Our Eyes Deceive Us

What is frightening about deepfakes is how well they imitate the real. Research indicates that humans correctly identify deepfakes only 24% to 62% of the time, depending on the context. That implies that most of us get it wrong more often than we realize. Worse, we are overconfident, convinced that we can tell the difference when we actually can’t.

The reality is that we have entered an era in which the eye can no longer confirm what it sees. If video can’t be relied upon, what becomes of witness testimony? Of journalism? Of evidence in court?
Of the emotional connections we make by glimpsing a loved one’s face or hearing their voice? The illusion isn’t merely visual; it is existential.

Social Media: The Breeding Ground

Social media virality is kerosene on a fire. Platforms such as X, Instagram, and TikTok prioritize engagement, not accuracy. A salacious deepfake travels further and faster than any sober fact-check. In India, 77% of misinformation originates on social media, where algorithms don’t ask “Is it true?” but “Will it go viral?”

This is not by chance. These platforms are designed to favor shock over substance. The more sensational the material, the more clicks it draws and the longer it survives. Here, deepfakes are not just welcomed; they are rewarded. That leaves each of us in a constant state of uncertainty, suspended between fact and fiction.

The New Faces of Bullying and Blackmail

We used to worry about schoolyard bullying. Now we worry about deepfake bullying. Teenagers are producing videos of fellow students doing things they never did and saying things they never said. The damage is devastating. Some victims are too ashamed to complain. Others are gaslighted, told it’s “just AI” or “not real,” when the harm they experience is very real.

In their most malicious form, deepfakes are being used for sextortion. A child is led to believe that incriminating photos exist when they do not. Or a genuine photo is manipulated just enough to become a weapon. The threat is chilling, and the emotional consequences (shame, guilt, fear) are toxic to young minds.

What’s at Stake

Let us be unambiguous: deepfakes are not a prank or a trend. They are an ethical test for our society. They challenge us to consider: do we cherish truth over convenience, trust over traffic, humanity over clicks?

If left unchecked, deepfakes will lead us down a path where nothing can be believed, no one can be trusted, and every image, every voice, every story is suspect. That is a world without truth.
And a world without truth is a world without justice, without community, without hope.

Hope Is Still Possible

But here’s the good news: resistance is on the rise. Technologies are being developed to detect and flag manipulated media. New legislation, such as the U.S. TAKE IT DOWN Act, is being proposed to safeguard victims. Countries such as Australia are bringing deepfake awareness into schools. India is considering reforms under its future Digital

CONNECTING THOUGHTS